"THIS MAY BE THE MOST IMPORTANT BOOK

ON HEALTH EVER WRITTEN"

- National Health Federation Bulletin -

THE

HEALING FACTOR

VITAMIN C

Against Disease

____________________________

By Irwin Stone

With forewords by Nobel Prizewinners

Dr. Linus Pauling

and

Dr. Albert Szent-Gyorgyi

___________________________________________________

Vitamin C may save your life! A noted biochemist reveals for laymen the exciting research into ascorbic acid's powers against such deadly enemies as cancer, heart disease, strokes, mental illness, old age, diabetes, arthritis, kidney disease, hepatitis -- even cigarette smoking!

AGING - ALLERGIES

ASTHMA - ARTHRITIS - CANCER

COLDS - DIABETES & HYPOGLYCEMIA

EYE TROUBLE - HEART DISEASE - STROKES

KIDNEY & BLADDER AILMENTS - MENTAL ILLNESS

STRESS SYNDROMES - POISONING - POLLUTION

ULCERS - VIRUSES

WOUNDS & FRACTURES

SHOCK

COULD THEY BE

THE RESULT OF VITAMIN C DEFICIENCY?

COULD THEY BE

PREVENTED BY TAKING MORE VITAMIN C?

COULD THEY BE TREATED WITH VITAMIN C?

IS VITAMIN C REALLY A VITAMIN?

IRWIN STONE SAYS YES, YES, YES AND NO!

After 40 years of research, Irwin Stone unfolds his startling conclusion that an ancient genetic mutation has left the primate virtually alone among animals in not producing ascorbic acid (Vitamin C) in his own body. By treating it as a "minimum daily requirement" instead of the crucial enzyme it really is, we are living in a state of sub-clinical scurvy whose symptoms have been attributed to other ailments. The answer is to change our thinking about Vitamin C and consume enough to replenish this long-lost "healing factor." Stone illustrates, with massive documentation, Vitamin C's remarkable ability to fight disease, counteract the ill effects of pollution and prolong healthy life -- easily and inexpensively!

GD/Perigee Books

are published by

The Putnam Publishing Group

ISBN 0-399-50764-7

THE

HEALING FACTOR

______________________

"VITAMIN C"

Against Disease

Irwin Stone

A GD/Perigee Book

Perigee Books

are published by

The Putnam Publishing Group

200 Madison Avenue

New York, New York 10016

Copyright © 1972 by Irwin Stone

All rights reserved. This book, or parts thereof,

may not be reproduced in any form without permission.

Published simultaneously in Canada by General Publishing

Co. Limited, Toronto.

Library of Congress Catalog Number: 72-77105

ISBN 0-399-50764-7

First Perigee printing, 1982

Printed in the United States of America

This book is dedicated to my wife, Barbara, whose patience and collaboration over the years made it possible.

CONTENTS

Forewords

Linus Pauling

Albert Szent-Gyorgyi

Acknowledgments

Introduction

Part I: Our Deadly Inheritance

1. The Beginnings of Life

2. From Fishes to Mammals

3. Our Ancestral Primate

4. The Evolution of Man

5. From Prehistory to the Eighteenth Century

6. The Nineteenth and Early Twentieth Centuries

7. Finding the Elusive Molecule

8. The Genetic Approach

9. Some Effects of Ascorbic Acid

10. "Correcting" Nature

Part II: Pathways to Research

11. Breaking the "Vitamin" Barrier

12. The Common Cold

13. Viral Infection

14. Bacterial Infection

15. Cancer

16. The Heart, Vascular System, and Strokes

17. Arthritis and Rheumatism

18. Aging

19. Allergies, Asthma, and Hay Fever

20. Eye Conditions

21. Ulcers

22. Kidneys and Bladder

23. Diabetes and Hypoglycemia

24. Chemical Stresses -- Poisons, Toxins

25. Physical Stresses

26. Pollution and Smoker's Scurvy

27. Wounds, Bone Fractures, and Shock

28. Pregnancy

29. Mental Disease

30. The Future

References Cited from the Medical Literature

Glossary

The numerals set off in parentheses in the text are intended to guide the reader to the appropriate medical citation listed at the end of the book.

FOREWORD

by Linus Pauling, Ph.D.

Nobel Laureate

This is an important book -- important to laymen, and important to physicians and scientists interested in the health of people.

Irwin Stone deserves much credit for having marshalled the arguments that indicate that most human beings have been receiving amounts of ascorbic acid less than those required to put them in the best of health. It is his contention, and it is supported by much evidence, that most people in the world have a disease involving a deficient intake of ascorbic acid, a disease that he has named hypoascorbemia. This disease seems to be present because of an evolutionary accident that occurred many millions of years ago. Ancestors of human beings (and of their close present-day relatives, other primates) were living in an area where the natural foods available provided very large amounts of ascorbic acid (very large in comparison with the amounts usually ingested now and the amounts usually recommended now by physicians and other authorities on nutrition). A mutation occurred that removed from the mutant the ability to manufacture ascorbic acid within his own body. Circumstances were such that the mutant had an evolutionary advantage over the other members of the population, who were burdened with the machinery for manufacturing additional ascorbic acid. The result was that the part of the population burdened with this machinery gradually died out, leaving the mutants, who depended upon their food for an adequate supply of ascorbic acid.

As man has spread over the earth and increased in number, the supplies of ascorbic acid have decreased. It is possible that most people in the world receive only one or two percent of the amounts of ascorbic acid that would keep them in the best of health. The resulting hypoascorbemia may be responsible for many of the illnesses that plague mankind.

In this book, Irwin Stone summarizes the evidence. The publication of Irwin Stone's papers and of this book may ultimately result in a great improvement in the health of human beings everywhere, and a great decrease in the amount of suffering caused by disease.

- Linus Pauling -

FOREWORD

by Albert Szent-Gyorgyi, M.D., Ph.D.

Nobel Laureate

My own interest in ascorbic acid centered around its role in vegetable respiration and defense mechanisms. All the same, I always had the feeling that not enough use was made of it for supporting human health. The reasons were rather complex. The medical profession itself took a very narrow and wrong view. Lack of ascorbic acid caused scurvy, so if there was no scurvy there was no lack of ascorbic acid. Nothing could be clearer than this. The only trouble was that scurvy is not a first symptom of lack but a final collapse, a premortal syndrome, and there is a very wide gap between scurvy and full health. But nobody knows what full health is! This could be found out by wide statistical studies, but there is no organization which could and would arrange such studies. Our society spends billions or trillions on killing and destruction but lacks the relatively modest means demanded to keep its own health and prime interest cared for. Full health, in my opinion, is the condition in which we feel best and show the greatest resistance to disease. This leads us into statistics which demand organization. But there is another, more individual difficulty. If you do not have sufficient vitamins and get a cold, and as a sequence pneumonia, your diagnosis will not be "lack of ascorbic acid" but "pneumonia." So you are waylaid immediately.

I think that mankind owes a serious thanks to Irwin Stone for having kept the problem alive and having called Linus Pauling's attention to it.

On my last visit to Sweden, I was told that the final evidence has been found that ascorbic acid is quite harmless. An insane person had the fixed idea that he needed ascorbic acid so he swallowed incredible amounts of it for a considerable period without ill effects. So, apart from very specific conditions, ascorbic acid cannot hurt you. It does not hurt your pocket either, since it is very cheap. It is used for spraying trees.

I also fully agree with Dr. Pauling's contention that individual needs for vitamin C vary within wide limits. Some may need high doses, others may be able to get along with less, but the trouble is that you do not know to which group you belong. The symptoms of lack may be very different. I remember my correspondence with a teacher in my earlier days who told me that he had an antisocial boy whom he was unable to deal with. He gave him ascorbic acid and the boy became one of his most easygoing, obedient pupils. Nor do wealth and rich food necessarily protect against lack of vitamins. I remember my contact with one of the wealthiest royal families of Europe where the young prince had constant temperature and had poor health. On administering vitamin C, the condition readily cleared up.

It gives me great satisfaction to see this book appear and I hope very much that its message will be understood.

- Albert Szent-Gyorgyi -

ACKNOWLEDGMENTS

This book took many years to write and involved many people. Because of a nonexistent budget and the fact that much of the data was in foreign languages, good friends had to be relied upon to supply translations. Among these friends were Lotte and George Bernard, Helene Gottlieb, Dorothy Kramer, Irving Minton, Jutta Nigrin, Sal Scaturo, Tanya Ronger, and Natasha and Otmar Silberstein.

Invaluable help and advice on library work were supplied by Eliphal Streeter and Vera Mitchell Throckmorton. The medical library of the Staten Island Public Health Hospital and the reprint facilities of the National Library of Medicine and the Medical Research Library of Brooklyn were especially helpful.

In any radically new scientific concept, encouragement and inspiration to carry on are difficult to come by. The author was fortunate in having men of scientific or medical stature such as Linus Pauling, Albert Szent-Gyorgyi, Frederick R. Klenner, Abram Hoffer, William J. McCormick, Thomas A. Garrett, Walter A. Schnyder, Louis A. Wolfe, Alexander F. Knoll, Marvin D. Steinberg, Benjamin Kramer, and A. Herbert Mintz as pillars of strength. Miriam T. Malakoff and Martin Norris supplied editorial advice and encouragement. My wife Barbara, in the latter years, handled the bulk of the library research. To all these people and to many others who have contributed, go my deep gratitude and thanks. I trust that their efforts effectively contribute to better health for man.

Discovery consists in seeing what everybody else has seen and thinking what nobody has thought.

Albert Szent-Gyorgyi

INTRODUCTION

The purpose of this book is to correct an error in orientation which occurred in 1912, when ascorbic acid, twenty years before its actual discovery and synthesis, was designated as the trace nutrient, vitamin C. Thus, in the discussions in this book the terms "vitamin C" and "ascorbic acid" are identical, although the author prefers to use "ascorbic acid."

Scurvy, in 1912, was considered solely as a dietary disturbance. This hypothesis has been accepted practically unchallenged and has dominated scientific and medical thinking for the past sixty years. The purpose of this vitamin C hypothesis was to produce a rationale for the conquest of frank clinical scurvy. That it did and with much success, using minute doses of vitamin C. Frank clinical scurvy is now a rare disease in the developed countries because the amounts of ascorbic acid in certain foodstuffs are sufficient for its prevention. However, in the elimination of frank clinical scurvy, a more insidious condition, subclinical scurvy, remained; since it was less dramatic, it was glossed over and overlooked. Correction of subclinical scurvy needs more ascorbic acid than occurs naturally in our diet, requiring other non-dietary intakes. Subclinical scurvy is the basis for many of the ills of mankind.

Because of this uncritical acceptance of a misaligned nutritional hypothesis, the bulk of the clinical research on the use of ascorbic acid in the treatment of diseases other than scurvy has been more like exercises in home economics than in the therapy of the sequelae of a fatal, genetic liver-enzyme disease. One of the objects of this book is to take the human physiology of ascorbic acid out of the dead-end of nutrition and put it where it belongs, in medical genetics. In medical genetics, wide vistas of preventive medicine and therapy are opened up by the full correction of this human error of carbohydrate metabolism.

For the past sixty years a vast amount of medical data has been collected relating to the use of ascorbic acid in diseases other than scurvy, but only very little practical therapeutic information has developed pertaining to its successful use in these diseases. The reader may well ask what is the difference between data and information? This can be illustrated by the following example: the number 382,436 is just plain data, but 38-24-36, that is information.

The most probable reason for the paucity of definitive therapeutic ascorbic acid information in the therapy of diseases other than scurvy is related to the fact that the vitamin C-oriented investigators were trying to relieve a trace-vitamin dietary disturbance and never used doses large enough to be pharmacologically and therapeutically effective. The new genetic concepts currently correct this old, but now obvious, mistake by supplying a logical rationale for these larger, pharmacologically effective treatments.

If the research suggestions contained in this book are properly and conscientiously followed through, it is the hope of the author that future medical historians may consider this as a major breakthrough in medicine of the latter quarter of the twentieth century.

While many scientific and medical papers have appeared, the publication of Dr. Linus Pauling's book, Vitamin C and the Common Cold, in late 1970 was the first scientific book ever published in the new medical fields of megascorbic prophylaxis and megascorbic therapy, which are branches of orthomolecular medicine. Dr. Pauling's book paved the way for this volume.

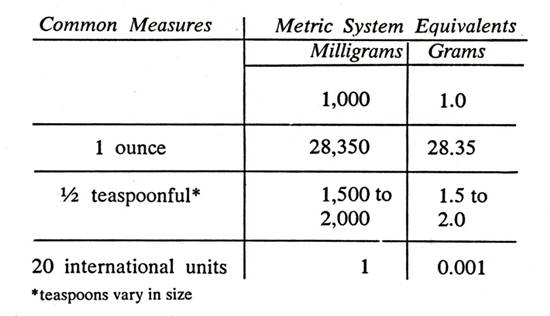

Since the size of the daily intake of ascorbic acid is so important in the later discussions, the reader can refer to the following table of equivalents. The dosages are usually expressed in the metric system in milligrams or grams of ascorbic acid:

PART I

____________________________________________________________

Our Deadly Inheritance

1. The Beginnings of Life

____________________________________________________________

The first part of this book is a scientific detective story. The corpus delicti is a chemical molecule, and to collect the evidence in this case we have to cover billions of years in time and have to search in such odd places as frog kidneys, goat livers, and "cabbages and kings." The search will be rewarding because it will contribute to the understanding of this tremendously important molecule. The evidence we unearth will show that the lack of this molecule in humans has contributed to more deaths, sickness, and just plain misery than any other single factor in man's long history. When the molecule is finally discovered and assigned its rightful place in the scheme of things, and its potentialities for good are fully realized, undreamed-of vistas of exuberant health, freedom from disease, and long life will be opened up.

To start on the first leg of our journey, we will have to get into our Time Machine, set the dials, and go back 2.5 to 3 billion years. It will be necessary to seal ourselves completely in the Time Machine and carry a plentiful supply of oxygen because the atmosphere in those days was very different from what it is now. It will be hot and steamy, with little or no oxygen, and besides much water vapor, will contain notable quantities of gases such as carbon dioxide, methane, and ammonia. The hot seas will contain the products of the chemical experiments that Nature had been conducting for millions of years. If we are fortunate, we will arrive on the scene just as Nature was preparing to launch one of its most complicated and organized experiments -- the production of living matter. If we were to sample the hot sea and examine it with our most powerful electron microscope, we would find in this thin consommé the culmination of these timeless chemical experiments in the form of a macromolecule having the property of being able to make exact duplicates of itself. The term "macromolecule" merely means a huge molecule which is formed out of a conglomeration of smaller unit molecules. The process of forming these huge molecules from the smaller units is called "polymerization," and is similar to building a brick wall (the macromolecule) from bricks (the smaller unit molecules). The "cement" holding the unit molecules together consists of various chemical and physical attractive forces of varying degrees of tenacity.

This self-reproducing macromolecule in this primordial soup might resemble some of our present-day viruses, but it had many important biochemical and biophysical problems to solve before it would begin to resemble some of the more primitive forms of life, such as bacteria, as we know them today. Nature had plenty of time to experiment and eventually came up with successful solutions to problems like heredity, enzyme formation, energy conservation, a protective covering for these naked macromolecules, and then cellular and multicellular organisms. The problem of heredity was solved so successfully by these early self-duplicating macromolecules that our present basis of heredity, the macromolecule DNA, is probably little changed from its original primordial form.

Enzyme formation was a problem that required an early solution if life was to continue evolving, since enzymes are the very foundation of the life process. An enzyme is a substance produced by a living organism which speeds up a specific chemical reaction. A chemical transformation that would require years to complete can be performed in moments by the mere presence of an enzyme. Enzymes are utilized by all living organisms to digest food, transform energy, synthesize tissues, and conduct nearly every biochemical reaction in the life process. The body contains thousands of enzymes.

Energy conservation and utilization was neatly solved in some of these early life forms by the development of photosynthesis: an enzymatic process which uses the energy of sunlight to transform carbon dioxide and water into carbohydrates. Carbohydrates are used for food and structural purposes, and these primitive forms evolved into the vast species of the plant kingdom.

At some time early in the development of life, certain primitive organisms developed the enzymes needed to manufacture a unique substance that offered many solutions to the multiple biological problems of survival. This compound, ascorbic acid, is a relatively simple one compared to the many other huge, complicated molecules produced by living organisms. Because of its unique properties, however, it is somewhat unstable and transient, a fact that will complicate our later search for this substance.

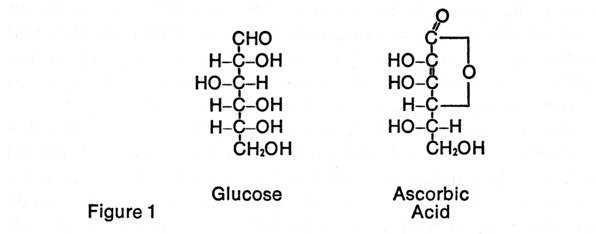

We now know that ascorbic acid is a carbohydrate derivative containing six carbon atoms, six oxygen atoms, and eight hydrogen atoms and is closely related to the sugar, glucose (see Figure 1). Glucose is of almost universal occurrence in living organisms, where it is used as a prime source of energy. Ascorbic acid is produced enzymatically from this sugar in both plants and animals.
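As a quick arithmetic check on the formula just given, the short Python sketch below adds up the molar masses of ascorbic acid (six carbon, eight hydrogen, and six oxygen atoms) and of glucose. The rounded atomic weights and the glucose formula, C6H12O6, are supplied here for comparison and do not appear in the text; the function and variable names are purely illustrative.

```python
# Standard atomic weights (g/mol), rounded; supplied for illustration.
ATOMIC_WEIGHT = {"C": 12.011, "H": 1.008, "O": 15.999}

# Atom counts: ascorbic acid as stated in the text; glucose is an added comparison.
ASCORBIC_ACID = {"C": 6, "H": 8, "O": 6}
GLUCOSE = {"C": 6, "H": 12, "O": 6}


def molar_mass(formula):
    """Sum atomic weight times atom count over every element in the formula."""
    return sum(ATOMIC_WEIGHT[element] * count for element, count in formula.items())


if __name__ == "__main__":
    print(f"ascorbic acid: {molar_mass(ASCORBIC_ACID):.2f} g/mol")
    print(f"glucose:       {molar_mass(GLUCOSE):.2f} g/mol")
```

The two formulas differ only by four hydrogen atoms, which is one way of seeing how closely ascorbic acid is related to the sugar.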

We can surmise that the production of ascorbic acid was an early accomplishment of the life process because of its wide distribution in nearly all present-day living organisms. It is produced in comparatively large amounts in the simplest plants and the most complex; it is synthesized in the most primitive animal species as well as in the most highly organized. Except possibly for a few microorganisms, those species of animals that cannot make their own ascorbic acid are the exceptions and require it in their food if they are to survive. Without it, life cannot exist. Because of its nearly universal presence in both plants and animals we can also assume that its production was well organized before the time when evolving life forms diverged along separate plant and animal lines.

This early development of the ascorbic acid synthesizing mechanisms probably arose from the need of these primitive living organisms to capture electrons from an environment with very low levels of oxygen. This process of scavenging for rare oxygen was a great advance for the survival and development of the organisms so equipped. It also may have triggered the development of the photosynthetic process and sparked the tremendous development of plant life. This great increase in plant life, with its use of the energy of sunlight to produce oxygen and remove carbon dioxide from the atmosphere, completely changed the chemical composition of the atmosphere, over a period of possibly a billion years, from oxygen-free air which would not support living animals as we know them to a life-giving oxygen supply approaching the composition of our present atmosphere.

The increase in the oxygen content of the atmosphere had other important consequences. In the upper reaches of the atmosphere, oxygen is changed by radiation into ozone, which is a more active form of oxygen. This layer of high-altitude ozone acts as a filter to remove the deadly ultraviolet rays from sunlight and makes life on land possible. This series of events, which occurred more than 600 million years ago, preceded the tremendous forward surge of life and the development of more complicated, multicellular organisms in post-Cambrian times, as is seen in the fossil record.

The only living organisms that survive to this day in a form that has not progressed or evolved much from the forms which existed in the earliest infra-Cambrian times are primitive single-cell organisms, such as bacteria, which do not make (and may not need) ascorbic acid in their living environment. All plants or animals which have evolved into complex multicellular forms make or need ascorbic acid. Was ascorbic acid the stimulus for the evolution of multicellular organisms? If not the stimulus, it certainly increased the biochemical adaptability necessary for survival in changing and unfavorable environments.

Further evidence for the great antiquity of the ascorbic acid-synthesizing systems may be obtained from the science of embryology. During its rapid fetal development, the embryo passes through the various evolutionary stages that its species went through in time. This led nineteenth-century embryologists to coin the phrase, "ontogeny recapitulates phylogeny," which is another way of saying the same thing. In fetal development, ascorbic acid can be detected very early, when the embryo is nothing more than a shapeless mass of cells. For instance, in the development of the chick embryo (which is convenient to work with), the chicken egg is devoid of ascorbic acid, but it can be detected in the early blastoderm stage of the growing embryo. At this stage the embryo is just a mass of cells in which no definite organs have as yet appeared, and it resembles the most primitive multicellular organisms -- both fossil and present living forms. In plants, also, the seeds have no ascorbic acid, but as soon as the plant embryo starts to develop, ascorbic acid is immediately formed. Thus all the available evidence points to the great antiquity of the ascorbic acid-synthesizing systems in life on this planet.

2. From Fishes to Mammals

_____________________________________________________________

If we reset the dials in our Time Machine and travel to a point about 450 million years ago, we may be able to witness the start of another notable experiment by Nature. In the seas are the beginnings of the vertebrates, a long line of animals that will eventually evolve into the mammals and man. These are the animals with a more or less rigid backbone, containing the start of a well-organized and complex nervous and muscular system, and capable of reacting much more efficiently to their environment than the swarms of simpler, spineless invertebrates, which had apparently reached the end of their evolutionary rope. Nature was ready to embark on another revolutionary, and more complicated, experiment.

Because of the increased complexity of their nervous system and a fast-acting muscular system, these primitive vertebrate fishes were able to gather food better and avoid enemies and other perils, all of which had increased survival value. Before they could do this, however, they had to develop complex, specialized organ systems in which various biochemical processes were carried out. And their requirements for ascorbic acid were undoubtedly much higher because of their much increased activity. The simpler structures of the invertebrates no longer sufficed and required much modification to suit the needs of these more active and alert upstarts, the vertebrates.

The vertebrate fishes were such a successful evolutionary experiment that for the next 100 million years or so they dominated the waters. Nature was now ready to carry out another experiment -- that of taking the animals out of the crowded seas and putting them on dry land. It had experience in this sort of operation since the plants had long ago left the seas and were well established on land. The land was no longer a place of barren fields, but was covered with dense vegetation. Two lines of modification were tried: in one, the fish was structurally modified so that it could clumsily exist out of the water; in the other, a more complete renovation job was done. Modifications of the fins and the swim bladder ended in the evolutionary blind alley of the lung fishes, but the more ambitious program -- involving a complete change in the biochemistry and life cycle -- produced a more successful line -- the amphibians. These creatures are born in the water and spend their early life there and then they metamorphose into land-living forms. Frogs and salamanders are present-day denizens of this group. The next step in evolution was to produce wholly land-living animals -- the reptiles. These were scaly animals that slithered, crawled, walked, or ran; and some grew to prodigious size. Some preferred swimming and reverted to the water and others took to the air. It was these airborne species that eventually evolved into the warm-blooded birds. The birds are of particular interest to us because they solved an ascorbic acid problem in the same fashion as the primitive mammals, which were appearing on the scene at about this time.

We have gone into this cursory sketch of this period of evolutionary history to trace the possible history of ascorbic acid in these ancient animals. If we assume that the present-day representatives of the amphibians, the reptiles, the birds, and the mammals have the same biochemical systems as their remote ancestors, then we can do some more detective work on our elusive molecule. These complex vertebrates all have well-defined organ systems that are assigned certain definite functions. Usually an organ has a main biological function and also many other accessory, but no less important, biochemical responsibilities. The kidney, whose main function is that of selective filtration and excretion, is also the repository of enzyme systems for the production of vitally important chemicals needed by the body. The liver, which is the largest organ of the body, functions mainly to neutralize poisons, produce bile, and act as a storage depot for carbohydrate reserves; but it also has many other duties to perform.

In examining present-day creatures we find that in the fishes, amphibians, and reptiles, the place where ascorbic acid is produced in the body is localized in the kidney. When we investigate the higher vertebrates, the mammals, we find that the liver is the production site and the kidneys are inactive. Apparently, during the course of evolution the production of enzymes for the synthesis of ascorbic acid was shifted from the small, biochemically crowded kidneys to the more ample space of the liver. This shift was the evolutionary response to the needs of the more highly developed species for greater supplies of this vital substance.

The birds are of particular interest because they illustrate this transition. In the older orders of the birds, such as the chickens, pigeons, and owls, the enzymes for synthesizing ascorbic acid are in the kidneys. In the more recently evolved species, such as the mynas and song birds, both the kidneys and the liver are sites of synthesis; and in other species only the liver is active and the kidneys are no longer involved in the manufacture of ascorbic acid. Thus we have a panoramic picture of this evolutionary change in the birds, where the process has been "frozen" in their physiology for millions of years.

This evolutionary shift from the kidneys to the liver took place at a time when temperature regulatory mechanisms were evolving and warm-blooded animals were developing from the previous cold-blooded vertebrates. In the cold-blooded amphibians and reptiles, the amounts of ascorbic acid that were produced in their small kidneys sufficed for their needs. However, as soon as temperature regulatory means were evolved -- producing the highly active, warm-blooded mammals -- the biochemically crowded kidneys could no longer supply ascorbic acid in ample quantities. Both the birds and the mammals, the two concurrently evolving lines of vertebrates, independently arrived at the same solution to their physiological problem: the shift to the liver.

3. Our Ancestral Primate

_____________________________________________________________

If we come forward to a time some 55 to 65 million years ago we will find that the warm-blooded vertebrates are the dominant animals, and they are getting ready to evolve into forms that are familiar to us. Life has come a long way since it discovered how to make ascorbic acid. In the warmer areas the vegetation is dense and the ancestors of our present-day primates -- the monkeys, apes, and man -- share the forests and treetops with the innumerable birds.

At about this time something very serious happened to a common ancestor of ours, the animal who would be a progenitor of some of the present primates. This animal suffered a mutation that eliminated an important enzyme from its biochemical makeup. The lack of this enzyme could have proved deadly to the species and we would not be here to read about it except for a fortuitous combination of circumstances.

Perhaps we should digress here and review some facts of mammalian biochemistry as related to this potentially lethal genetic accident. It will not be difficult, and it will help in understanding the thesis of this book.

All familiar animals are built from billions of cells. Masses of cells form the different tissues, the tissues form organs, and the whole animal is a collection of organs. The cell is the ultimate unit of life. Each cell has a cell membrane, which separates it from neighboring cells and encloses a jellylike mass of living stuff. Floating in this living matter is the nucleus, which is something like another, smaller cell within the cell. This nucleus contains the reproducing macromolecule called desoxyribonucleic acid (DNA). DNA is the biochemical basis of heredity and determines whether the growing cells will develop into an oak tree, a fish, a man or whatever. This molecule is a long, thin, double-stranded spiral containing linear sequences of four different basic unit molecules. The sequence of the four unit molecules as they are arranged on this spiral is the code that forms the hereditary blueprint of the organism. When a cell divides, this double-stranded molecule separates into two single strands and each daughter cell receives one. In the daughter cell, the single strand reproduces an exact copy of itself to again form the double strand and in this way each cell contains a copy of the hereditary pattern of the organism.
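The copying step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the pairing rule (A with T, G with C) is background knowledge not given in the text, the sequence is hypothetical, and the sketch ignores the opposite orientation of the two strands in a real molecule.

```python
# Base-pairing rule (A with T, G with C); background knowledge, not from the text.
PAIR = {"A": "T", "T": "A", "G": "C", "C": "G"}


def partner_strand(strand):
    """Build the strand that pairs, unit by unit, with the given single strand."""
    return "".join(PAIR[base] for base in strand)


def divide(double_strand):
    """Split a double strand and rebuild the missing partner in each daughter cell."""
    first, second = double_strand
    daughter_a = (first, partner_strand(first))
    daughter_b = (partner_strand(second), second)
    return daughter_a, daughter_b


if __name__ == "__main__":
    strand = "ATGGAGGGT"  # hypothetical sequence
    parent = (strand, partner_strand(strand))
    daughter_a, daughter_b = divide(parent)
    print(daughter_a == parent and daughter_b == parent)
```

Each daughter receives one original strand and rebuilds its partner, so both end up carrying the same double-stranded pattern as the parent.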

These long threadlike molecules are coiled and form bodies in the nucleus that were called chromosomes by early microscopists because they avidly absorbed dyes and stains and thus became readily visible in preparations viewed microscopically. These microscopists suspected that these bodies were in some way connected with the process of inheritance but did not know the exact mechanisms.

Certain limited sections of these long, spiral molecules, which direct or control a single property such as the synthesis of a single enzyme, are called genes. A chromosome may be made up of thousands of genes. The exact order of the four different unit molecules in a gene determines, say, the protein structure of an enzyme. If only one of these unit molecules is out of place or transposed among the thousands in a gene sequence, the protein structure of the enzyme will be modified and its enzymatic activity may be changed or destroyed. Such a change in the sequence of a DNA molecule is called a mutation.
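The effect of a single misplaced unit can be illustrated with a short sketch. It is only an illustration under stated assumptions: the four unit molecules are written with their conventional letters A, T, G, and C, the table is a tiny excerpt of the standard genetic code, and the gene fragment and the function names `translate` and `point_mutate` are hypothetical. A real gene runs to thousands of units.

```python
# Tiny excerpt of the standard genetic code (coding-strand DNA triplets).
# Only the triplets used in this illustration are listed.
CODON_TABLE = {
    "ATG": "Met",
    "CCT": "Pro",
    "GAG": "Glu",
    "GTG": "Val",
    "TAA": "STOP",
}


def translate(dna):
    """Read the sequence three units at a time and return the amino acids."""
    protein = []
    usable = len(dna) - len(dna) % 3  # ignore any incomplete trailing triplet
    for i in range(0, usable, 3):
        amino = CODON_TABLE[dna[i:i + 3]]
        if amino == "STOP":
            break
        protein.append(amino)
    return protein


def point_mutate(dna, position, new_base):
    """Return a copy of the sequence with one unit replaced."""
    return dna[:position] + new_base + dna[position + 1:]


# Hypothetical gene fragment, invented for this illustration.
original = "ATGCCTGAGTAA"
mutated = point_mutate(original, 7, "T")  # the triplet GAG becomes GTG

print(translate(original))
print(translate(mutated))
```

In this sketch, changing one unit out of twelve swaps one amino acid for another in the resulting protein chain. Whether such a swap leaves an enzyme's activity intact, alters it, or destroys it depends on where in the protein it falls.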

These mutations can be produced experimentally by means of various chemicals and by radiations such as X rays, ultraviolet rays, or gamma rays. Cosmic rays, in nature, are no doubt a factor in inducing mutations. It is on these mutations that Nature has depended to produce changes in evolving organisms. If the mutation is favorable and gives the plant or animal an advantage in survival, it is transmitted to its descendants. If it is unfavorable and produces death before reproduction takes place, the mutation dies out with the mutated individual and is regarded as a lethal mutation. Some unfavorable mutations which are serious enough to be lethal, but which the mutated animal survives, are called conditional lethal mutations. This type of mutation struck a primitive monkey that was the ancestor of man and some of our present-day primates.

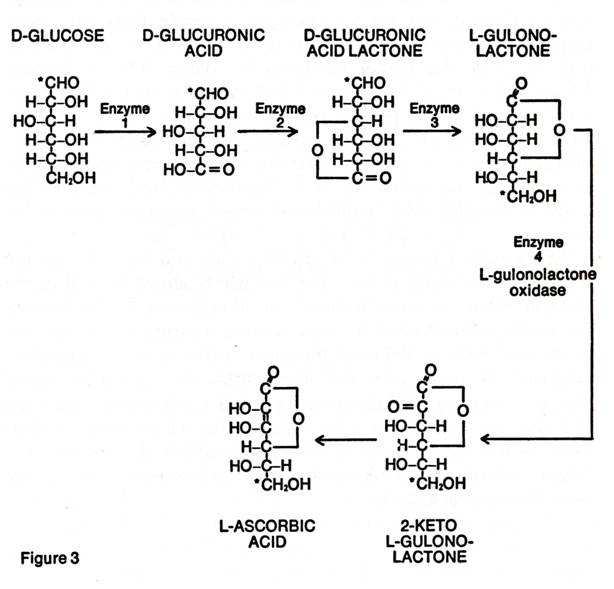

In nearly all the mammals, ascorbic acid is manufactured in the liver from the blood sugar, glucose. The conversion proceeds stepwise, each step being controlled by a different enzyme. The mutation that occurred in our ancestral monkey destroyed his ability to manufacture the last enzyme in this series -- L-gulonolactone oxidase. This prevented his liver from converting L-gulonolactone into ascorbic acid, which was needed to carry out the various biochemical processes of life. The lack of this enzyme made this animal susceptible to the deadly disease, scurvy. To this day, millions of years later, all the descendants of this mutated animal, including man, have the intermediate enzymes but lack the last one. And that is why man cannot make ascorbic acid in his liver.

This was a serious mutation because organisms without ascorbic acid do not last very long. However, by a fortuitous combination of circumstances, the animal survived. First of all, the mutated animal was living in a tropical or semitropical environment where fresh vegetation, insects, and small animals were available the year round as a food supply. All these are good dietary sources of ascorbic acid. Secondly, the amount of ascorbic acid needed for mere survival is low and could be met from these available sources of food. This is not to say that this animal was getting as much ascorbic acid from its food as it would have produced in its own body if it had not mutated. While the amount may not have been optimal, it was sufficient to ward off death from scurvy. Under these ideal conditions the mutation was not serious enough to have too adverse an effect on survival. It was only later when this animal's descendants moved from these ideal surroundings, this Garden of Eden, and became "civilized" that they -- we -- ran into trouble.

This defective gene has been transmitted for millions of years right up to the present-day primates. This makes man and a few other primates unique among the present-day mammals. Nearly all other mammals manufacture ascorbic acid in their livers in amounts sufficient to satisfy their physiological requirements. This had great survival value for these mammals, who, when subjected to stress, were able to produce much larger amounts of ascorbic acid to counteract adverse biochemical effects. And there was plenty of stress for an animal living in the wild, competing for scarce food, and trying to avoid becoming a choice morsel for some other predator.

To the best of our knowledge, only two other non-primate mammals have suffered a similar mutation and have survived. How many others may have similarly mutated and died off, we shall never know. The guinea pig survived in the warm lush forests of New Guinea where vegetation rich in fresh ascorbic acid was readily available. The other mammal is a fruit-eating bat (Pteropus medius) from India. The only other vertebrates that are known to harbor this defective gene are certain passeriform birds.

Because of this missing or defective gene, man, some of the other primates, the guinea pig, and a bat will develop and die of scurvy if deprived of an outside source of ascorbic acid. A guinea pig, for example, will die a horrible death within two weeks if totally deprived of ascorbic acid in its diet.

4

_____________________________________________________________

In the last chapter it was estimated that the conditional lethal mutation which destroyed our ancestral monkey's ability to produce his own ascorbic acid occurred some 55 to 60 million years ago. Actually, we do not know which primate ancestor was afflicted with this mutation, nor can we exactly pinpoint this occurrence in the time scale. Up to the time of this writing, little work has been done to try to obtain this important data. However, for the purposes of our discussion, it is not essential to know exactly which of man's ancestors was burdened with this genetic defect nor when it happened. It is sufficient to know that it happened before man came on the scene. Presently available evidence indicates that the members of the primate suborder Anthropoidea, the old world monkeys, the new world monkeys, the apes and man, still carry this defective gene.

While this defect did not wipe out the mutated species, it must have put these animals at a serious disadvantage. Normally, a mammal equipped to synthesize its own ascorbic acid will produce it in variable amounts depending upon the stresses the animal is undergoing. These animals have a feedback mechanism that produces more when the animal needs more. The stresses of living under wild and unfavorable conditions were great. The animals had to continually keep out of the way of predators while they searched for food; they had to procreate and take care of their young; they had to resist such environmental stresses as heat, cold, rain, and snow, and the biological stresses of parasites and diseases of bacterial, fungal, and viral origin. Animals that were able to cope with these stresses by producing optimal amounts of ascorbic acid within their bodies were more resistant to environmental extremes of heat and cold, better able to fight off infections and disease, better able to recover from physical trauma, and better prepared to do it repeatedly. The presence of ascorbic acid-synthesizing enzymes conferred enormous powers of survival.

It is likely that our mutated ancestors never got enough ascorbic acid from their food to completely combat all the biochemical stresses. It is equally certain that the amounts they ingested were enough to ensure their survival until they were able to reproduce and raise their young. Their genetic defect must surely have been a serious handicap in their fight for survival, but they did survive.

If we follow the trunk of the evolving primate tree, we come to animals that we know from their fossil remains. Propliopithecus, Proconsul, Oreopithecus, and Ramapithecus, to name a few, may have been the ancestors of the present-day higher primates. These animals were more ape than man, but we are coming closer. About a million years ago we find Australopithecus of Southern Africa. These creatures were no longer living an arboreal existence, swinging through the branches of the forest; they had come down to earth and lived in the wide-open spaces of the grassy plains. While in appearance they still resembled chimpanzees, they held their heads erect, not thrust forward; and they were built to walk erect most of the time. Their hands had fingers too slender to walk upon and their jaws contained teeth more human-like.

In the last million years, evolution developed recognizably human forms. Heidelberg man, Pithecanthropus, Sinanthropus, Swanscombe man, and others bring us to a point about 100,000 years ago. From here on we find the remains of manlike creatures widely distributed: Neanderthal man in the Near East and Europe; Rhodesian man in South Africa; and later, 20,000 to 40,000 years ago, modern man in Europe, Asia, South Africa, and America. The Neanderthal man of 50,000 to 70,000 years ago was a great hunter and went after the biggest and fiercest animals, the woolly rhinoceroses and mammoths. Appetites and diets evolved from the vegetation, fruits, nuts, and insects of the arboreal monkeys to the raw red meat of these primitive hunters. Fresh raw meat is a good source of ascorbic acid, and Neanderthal man needed plenty of it to survive the climate that had started to turn cold, and the glaciers that covered most of Europe about 50,000 years ago. From a study of a Neanderthal skeleton found in France about a half century ago, it was concluded that Neanderthal man did not stand erect but walked with knees bent in an uncertain, shuffling gait. However, it was later discovered that this skeleton was that of an older man with a severe case of arthritis -- fossil evidence of a pattern which might indicate the lack of sufficient ascorbic acid to overcome the stresses of Ice-Age cold and infection.

The evolution of the nervous system and the explosive development of the brain and intelligence compensated in some measure for this biochemical defect by finding new sources of ascorbic acid. The normally herbivorous and insect-eating species became hunters after raw red meat and fish, and later went on to raise their own animals. It was this change in dietary practice that permitted the wide dispersion of the humanoids. They were no longer limited to tropical or semitropical areas where plants or insects rich in ascorbic acid were available the year round. To gauge the effect of this factor, just compare the wide dispersion of man on the face of this globe with his less adaptable primate relatives, the monkeys and apes. They are still swinging in the branches close to their supply of ascorbic acid.

This dispersal of primitive man into environs for which he was not biochemically adapted was not easy and was only accomplished at a high cost -- increased mortality, shortened life span, and great physical suffering. Man's survival under these unfavorable conditions is a tribute to his guts and brainpower. Here was one of Nature's first experiences with an organism that could fight back against an unfavorable environment and win. But the victory, as we can see all around us, was a conditional one.

A study of the remains found in ancient burial grounds reveals some of the privations suffered by Stone Age man and his descendants. Living in the temperate zone, he faced the cold as a constant threat to his existence. The exhumed bones give evidence of much disease, nutritional deficiencies, and general starvation. Infant and child mortality was enormous, and the life span of those who survived their teens was rarely beyond thirty or thirty-five years. Some abnormalities evidenced in the fossilized limbs of these ancient populations could have been caused by recurring episodes of inadequate intake of ascorbic acid. Future studies in paleopathology should bring additional interesting facts to light.

Another way of assessing the extent of the ascorbic acid nutriture of these ancient peoples is to find out what current primitive societies used as food, and what their methods of food preparation and preservation were before "civilization" reached them: the Australian aborigine, the native tribes of Africa, the Indians of the Americas, and the Eskimo, who has worked out a pattern for survival in a most unfavorable environment.

In reviewing the diets of these peoples, one is impressed by their variety. All products of the plant and animal kingdoms, without exception, were at one time or another consumed. Those who ate their food fresh and raw had more chance of obtaining ascorbic acid. Extended cooking or drying tends to destroy ascorbic acid. The availability of food appears to have been the main factor in an individual's nutrition and, aside from certain tribal taboos, there were no aesthetic qualms against eating any kind of food. A luscious spider's abdomen bitten off and eaten raw, a succulent lightly toasted locust consumed like a barbecued shrimp, the larva of the dung beetle soaked in coconut milk and roasted were good sources of ascorbic acid. Similarly, raw fish, seaweed, snails, and every variety of marine mollusk were tasty morsels for those peoples living near the shores.

The name "Eskimo" comes from the Cree Indian word "uskipoo," meaning "he eats raw meat." During the long winter, Eskimos depended upon the ascorbic acid content of raw fish or freshly caught raw seal meat and blood. Any Eskimo who cooked his fish and meat -- thereby reducing its ascorbic acid content -- would never have survived long enough to tell about his new-fangled technique of food preparation. But not even the best of these diets ever supplied ascorbic acid in the amounts that would have been produced in man's own liver if he had the missing gene. The levels were, as always, greatly submarginal for optimal health and longevity, especially under high-stress conditions. The estimated life expectancy for an Eskimo man in northern Greenland is only twenty-five years.

Two great advances in the early history of man were the development of agriculture and the domestication of animals. In the temperate zones, agriculture tended to concentrate on cereal grains or other seed crops, which could easily be stored without deterioration and used during the long winters. These crops are notably lacking in ascorbic acid and, while they supplied calories, scurvy would soon develop in those who depended on them as a staple diet. Whatever fresh vegetables or fruits were grown were generally rendered useless as an antiscorbutic foodstuff for winter use by the primitive methods of drying and preservation.

A trick for imparting antiscorbutic qualities to seed crops was discovered by various agricultural peoples and then forgotten. It was rediscovered in Germany in 1912, and it has persisted among some Asiatic people. This simple life-saving measure was to take portions of these seed crops (beans and the like), soak them in water, and then allow them to germinate and sprout. The sprouted seeds were consumed. Ascorbic acid is required by the growing plant and it is one of the first substances that is synthesized in the growing seed to nourish the plant embryo. Bean sprouts are even now a common item of the Chinese cuisine, as they have been for thousands of years; they contain our elusive molecule, while unsprouted beans do not.

The early people whose culture was based upon animal husbandry may have fared better in the winter than the agricultural people. They had a built-in continuous ascorbic acid supply in their fresh milk, fresh meat, and blood. If they used these products fresh they were safe, but if they attempted long preservation, the antiscorbutic properties were lost and the foodstuffs became potential poisons.

All in all, it has been a terrible struggle throughout prehistory and history to obtain the little daily speck of ascorbic acid required for mere survival.

5

_____________________________________________________________

FROM PREHISTORY TO THE EIGHTEENTH CENTURY

Now we come to man in historical times and find that he has been plagued by the effects of this genetic defect from the earliest days of recorded history. Before discussing this great scourge of mankind, let us examine the disease caused by this genetic defect. Clinical scurvy is such a loathsome and fatal affliction that it is difficult to conceive that an amount of ascorbic acid that could be piled upon the head of a pin is enough to prevent its fatal effects.

In describing the disease we must distinguish between chronic scurvy and acute scurvy. Chronic or biochemical scurvy is a disease that practically everyone suffers from and its individual severity depends upon the amount of one's daily intake of ascorbic acid. It is a condition where the normal biochemical processes of the body are not functioning at optimal levels because of the lack of sufficient ascorbic acid. There are all shades of biochemical scurvy and it can vary from a mild "not feeling right" to conditions where one's resistance is greatly lowered and susceptibility to disease, stress, and trauma is increased. The chronic form usually exists without showing the clinical signs of the acute form and this makes it difficult to detect and diagnose without special biochemical testing procedures. The acute form is the "classical" scurvy recognized from ancient times and is due to prolonged deprivation of ascorbic acid, usually combined with severe stress.

The first symptom of acute scurvy in an adult is a change in complexion: the color becomes sallow or muddy. There is a loss of accustomed vigor, increased lassitude, quick tiring, breathlessness, a marked disinclination for exertion, and a desire for sleep. There may be fleeting pains in the joints and limbs, especially the legs. Very soon the gums become sore, bleed readily, and are congested and spongy. Reddish spots (small hemorrhages) appear on the skin, especially on the legs at the sites of hair follicles. Sometimes there are nosebleeds or the eyelids become swollen and purple or the urine contains blood. These signs progress steadily -- the complexion becomes dingy and brownish, the weakness increases, with the slightest exertion causing palpitation and breathlessness. The gums become spongier and bleed, the teeth become loose and may fall out, the jawbone starts to rot, and the breath is extremely foul. Hemorrhages into any part of the body may ensue. Old healed wounds and scars on the body may break open and fresh wounds and sores show no tendency to heal. Pains in the limbs render the victim helpless. The gums swell so much that they overlay and hide the teeth and may protrude from the mouth. The bones become so brittle that a leg may be broken by merely moving it in bed. The joints become so disorganized that a rattling noise can be heard from the bones grating against each other when the patient is moved. Death usually comes rapidly from sudden collapse at slight exertion or from a secondary infection, such as pneumonia. This sequence of events, from apparent health to death, may take only a few months.

Acute clinical scurvy was recognized early by ancient physicians and was probably known long before the dawn of recorded history. Each year in the colder climes, as winter closed in, the populations were forced onto a diet of cereal grains and dried or salted meat or fish, all low in ascorbic acid. Foods rich in ascorbic acid were scarce if not entirely lacking. The consequence of this inadequate diet was that near the end of winter and in early spring, whole populations were becoming increasingly scorbutic. Thus weakened, their resistance low, people were easy prey for the rampant bacterial and viral infections that decimated the population. This happened year after year for centuries and this is the origin of the so-called "spring tonics," which were attempts to alleviate this annually recurring scurvy (by measures which were generally ineffective). The number of lives lost in this annual debacle and the toll in human misery are inestimable. People became so accustomed to this recurring catastrophe that it was looked upon as the normal course of events and casually accepted as such. Only in times of civil strife, of wars and sieges, or on long voyages, where the toll in lives lost to this dread disease was so great, did it merit special notice.

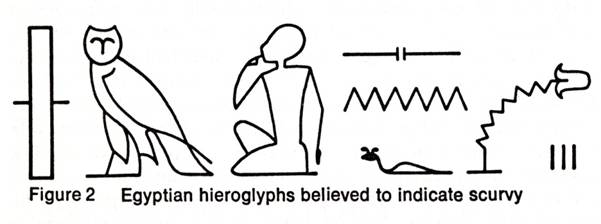

The earliest written reference to a condition that is recognizable as scurvy is in the Ebers papyrus, a record of Egyptian medical lore written about 1500 B.C. Figure 2 shows various Egyptian hieroglyphs for scurvy. The figure of the little man pointing to his mouth and the lips oozing blood indicated the bleeding gums of the disease. It is likely that scurvy was clearly recognized at least 3,000 years ago. Hippocrates (ca. 400 B.C.), the father of medicine, described diseases that sounded suspiciously like scurvy. Pliny the Elder (A.D. 23-79), in his Natural History, describes a disease of Roman soldiers in Germany whose symptoms bespeak scurvy and which was cured by a plant, herba Britannica. Sire Jean de Joinville, in his history of the invasion of Egypt by the Crusaders of Saint Louis in 1260, gives a detailed description of the scurvy that afflicted this army. He mentions the hemorrhagic spots, the fungous and putrid gums, and the legs being affected. It was scurvy that led to the ultimate defeat and capture of Saint Louis and his knights. It is certain that, throughout the Crusades, scurvy took a far greater toll of the Crusaders than all the weapons of the Saracens.

In the great cycle of epidemics that hit Europe in the fourteenth century -- the Black Death of the Middle Ages -- millions of people died. The Black Death was a fulminating, virulent epidemic of a bacterial disease, bubonic plague, concurrent with pulmonary infection superimposed on scurvy with its diffused superficial hemorrhages that caused the skin to turn black or bluish-black. The fact that the disease attacked a population that was first thoroughly weakened by scurvy accounts for the extremely high mortality: one-fourth of the population of Europe -- or 25 million deaths. It is known that resistance to infection is lowered by a deficiency of ascorbic acid, so a disease which would normally be a mild affliction could whip through a scorbutic population with unprecedented fierceness and fatally strike down thousands.

With the invention of the printing press and the easier dissemination of the printed word, the sixteenth and following centuries saw the appearance of many tracts that described scurvy and its bizarre causes, and offered many different exotic treatments and "cures" for the disease. Much earlier, folklore had associated scurvy with a lack of fresh foodstuffs, and the antiscorbutic qualities of many plants had been known. But these qualities were forgotten and had to be rediscovered again and again at a great cost in lives and suffering.

Improved ship construction and the ensuing long voyages provided ideal conditions for the rapid development of acute scurvy under circumstances where the developing symptoms could be readily observed and recorded. Sailors quickly succumbed to scurvy due to inadequate diet; physical exertions; exposure to extremes of heat, cold, and dampness; and the generally unsanitary shipboard conditions. In a few short months, out of what started off as a seemingly healthy crew, only a few remained fit for duty and were able to stand watch. The logs of these voyages make incredible reading today. Before scurvy was finally controlled, this scourge destroyed more sailors than all other causes, including the extremely high tolls of naval warfare.

In 1497, Vasco da Gama, while attempting to find a passage to the Indies by way of the Cape of Good Hope, lost 100 of his 160-man crew to scurvy. Magellan, in 1519, set sail with a fleet of five ships on one of history's great voyages, the circumnavigation of the earth. Three years later, only one ship, with only eighteen members of the original crew, returned to Spain. On occasion, a Spanish galleon would be found drifting, a derelict, its entire crew dead of scurvy. Many books were written on scurvy during the sixteenth to eighteenth centuries; some authors hit upon means to actually combat the disease, while others, clouded by the medical lore of the times, were way off base.

We still find logs of eighteenth-century voyages that recount the devastating effects of scurvy, and others where the master of the ship was able to prevent the disease. In 1740, Commodore Anson left England with six vessels and 1500 seamen; he returned four years later with one ship and 335 men. Between 1772 and 1775, on his round-the-world trip, however, Captain James Cook lost only one man out of his crew of 118, and that one not from scurvy. Cook took every opportunity when touching land to obtain supplies of fresh vegetables and fruit. He usually had a good store of sauerkraut aboard and he knew the beneficial qualities of celery and scurvy grass. After the voyage was completed, Cook was presented with the Copley Medal of the Royal Society. This award was given for his success in making such a lengthy voyage without a single death from scurvy -- not for his great navigational and geographic discoveries. His scientific contemporaries understood the great significance of Cook's accomplishment. Between the time of Anson's failure and Cook's great success another important event took place; the first modern type of medical experiment was carried out by James Lind. We will discuss this later.
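The contrast between these voyages is easier to see as proportions. The following is a minimal arithmetic sketch using only the crew figures quoted above; the dictionary layout and the function name `loss_rate` are assumptions made for the illustration. Note that the text gives total losses for Anson's expedition, so that figure covers all causes, not scurvy alone.

```python
# Crew figures as quoted in the text. The layout and names here are
# assumptions made for this illustration.
voyages = {
    "da Gama, 1497": {"crew": 160, "lost": 100},
    # Anson left with 1500 seamen and returned with 335 (losses from all causes).
    "Anson, 1740": {"crew": 1500, "lost": 1500 - 335},
    # Cook lost one man, and that one not from scurvy.
    "Cook, 1772-1775": {"crew": 118, "lost": 1},
}


def loss_rate(crew, lost):
    """Fraction of the original crew that was lost."""
    return lost / crew


for name, v in voyages.items():
    rate = loss_rate(v["crew"], v["lost"])
    print(f"{name}: {v['lost']} of {v['crew']} lost ({rate:.1%})")
```

On these figures da Gama lost well over half his crew and Anson more than three quarters of his, while Cook lost less than one man in a hundred.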

This is only a very brief record of the easily avoidable havoc caused by scurvy on the high seas, but those on land fared little better. In addition to the fearful, annually recurring scorbutic devastation of the population in the late winter and early spring, there were special circumstances which brought on deadly epidemics of acute scurvy. Wars and long sieges brought these epidemics to a head. In a brief sampling of the wars of the sixteenth to eighteenth centuries, scurvy appeared at the siege of Breda in Holland in 1625 and at Thorn in Prussia in 1703, where it accounted for 5,000 deaths among the garrison and noncombatants. It took its toll of the Russian armies in 1720 in the war between the Austrians and the Turks, of the English troops that captured Quebec in 1759, and of the French soldiers in the Alps in the spring of 1795.

Witness also Jacques Cartier's expedition to Newfoundland in 1535. These were men from a civilized culture with a long background of medical lore. One hundred of Cartier's 110 men were dying of scurvy until the Indian natives showed him how to make a decoction from the tips of spruce fir which cured his men. This trick, incidentally, was also used by the defenders of Stalingrad in World War II to stave off scurvy.

By the middle of the eighteenth century, the stage had been prepared for advances in the prevention and treatment of scurvy. Admiral Sir Richard Hawkins, in 1593, protected the crew of the Dainty with oranges and lemons; among others, Commodore Lancaster, in voyages for the East India Company, had shown by 1600 that scurvy was an easily preventable disease. It remained, however, for James Lind to prove this. James Lind, surgeon's mate on H.M.S. Salisbury, was inspired by the hardships of the Anson fiasco and the many cases of scurvy he had treated on his own ships. Lind was a keen observer and eventually became known as "the father of nautical medicine." He conducted the first properly controlled clinical therapeutic trial on record.

The crucial experiment, which Lind performed in 1747 at sea on the Salisbury, was to take twelve seamen suffering from the same degree of scurvy and divide them into six groups of two each. In addition to their regular diet, he gave each group a different, commonly used treatment for scurvy and observed its action. One group received a quart of cider daily, the second group received twenty-five drops of dilute sulfuric acid three times a day, the third group was given two spoonfuls of vinegar three times a day, the fourth group drank half a pint of seawater three times a day, and the fifth received a concoction of garlic, mustard seed, horseradish, gum myrrh, and balsam of Peru. The last group received two oranges and one lemon daily for six days. These last two men improved with such astonishing rapidity that they were used as nurses to care for the others. There was slight improvement in the cider group but no benefit was observed in the others. Here was clear-cut, easily understandable evidence of the value of citrus fruit in the cure of scurvy. Although Lind did not realize it, he had found a good natural source of our elusive molecule.

Lind left the Royal Navy in 1748, obtained an M.D. degree from Edinburgh University, and went into private practice. He was later physician at the Royal Naval Hospital at Haslar and physician to the Royal Household of George III at Windsor. He continued his work on scurvy and, in 1753, published one of the classics of medical literature, A Treatise of the Scurvy. What was clear to Lind, and is commonplace to us, was not so readily accepted by the naval bureaucracy of his day. It took over forty years for the British Admiralty to adopt Lind's simple prophylactic daily dose of one ounce of lemon juice per man. The official order came through in 1795, just a year after Lind's death. It has been estimated that this 42-year delay cost the Royal Navy 100,000 casualties to scurvy.

This simple regimen wiped out scurvy in the naval forces of England, and it became their secret weapon for maintaining their mastery of the seas. There is no doubt that this simple ration of lemon juice was of far greater importance to the Royal Navy of the eighteenth and nineteenth centuries than all the improvements in speed, firepower, armor, and seaworthiness. Naval officers of the time asserted that it was equivalent to doubling the fighting force of the navy. Previously, because of the ravages of scurvy, the seagoing fleets had to be relieved every ten weeks by a freshly manned fleet of equal strength so that the scorbutic seamen could be brought home for rehabilitation. The impact of our elusive molecule on history has never been adequately evaluated. In this case Lind did as much as Nelson to break the power of Napoleon. The English vessels were able to maintain continuous blockade duties, lying off the coast of France for months at a time without the necessity of relieving the men. Were it not for Lind, the flat-bottomed invasion barges assembled by Napoleon might well have crossed the English Channel.

6

_____________________________________________________________

THE NINETEENTH AND EARLY TWENTIETH CENTURIES

One would imagine that Lind's clear-cut clinical demonstration and the experiences of the Royal Navy in wiping out the disease would have pointed the way toward banishing this disease completely. However, it takes much more than logic and clear-cut demonstrations to overcome the inertia and dogma of established thought. The forty-two years that it took the British Admiralty to adopt Lind's recommendations may seem unduly long, even for a stolid bureaucracy, but this may be a speed record compared with other agencies. The British Board of Trade took 112 years -- until 1865 -- before similar precautions were adopted for the British merchant marine. There are records of seamen on the merchant vessels succumbing to scurvy even while delivering lemons to the ships of the Royal Navy. Over 30,000 cases of scurvy were reported in the American Civil War and it took the U.S. Army until 1895 to adopt antiscorbutic rations.

The saga of scurvy continues, with its incredible toll in human lives and suffering, up to the present day. In the nineteenth century, 104 land epidemics have been tabulated. And the twentieth century has had plenty of trouble with the disease, not only as a result of the World Wars but in civil populations, as a result of ignorance and improper use of food.

In the latter part of the nineteenth century Barlow's disease became prevalent, and it was recognized as infantile scurvy. It appeared at the time when artificial feeding of babies was becoming popular, and took the name of the doctor who described the disease in 1883. Actually, the disease was first described in 1650, but it was confused with rickets until Barlow's clear differentiation. There were so many cases that it was also known as Mueller's or Cheadle's disease. Breast-fed babies did not appear to get the disease, but those fed with boiled and heated cow's milk or with cereal substitutes did. It is an intensely painful disease and results in stunted growth and delayed development. The disease persists to the present day and is amenable to the same preventive and curative measures as adult scurvy -- the speck of ascorbic acid contained in fresh fruits and vegetables. Here again the lesson had to be relearned, the hard way, with babies put in the same class as seamen.

Scurvy and its treatment have had many ups and downs during the long history of man, and one series of events in the nineteenth century raised some mistrust in the prophylactic powers of fresh fruits. This is another example of the kind of mistaken conclusions, based on confusion, incomplete observations, and improper interpretations, which have cursed the history of scurvy. At the time this took place all knowledge of scurvy was completely empirical; there were no experimental or quantitative data because there were no experimental animals known that could be given scurvy. We did not know any more than Lind knew a hundred years before.

The English used the term "lime" indiscriminately for both lemons and limes. In 1850, for political and economic reasons, they substituted the West Indian lime for the traditional Mediterranean lemon used by the Royal Navy since 1795. We now know that the Mediterranean lemon is a good source of our elusive molecule, while the West Indian lime is not. In 1875 the Admiralty supplied a large amount of West Indian lime juice to Sir George Nares's expedition to discover the North Pole. An epidemic of scurvy broke out and ruined the expedition. A commission was appointed to inquire into the cause of the disaster but could arrive at no satisfactory conclusion. It was even more perplexing because a previous Arctic expedition in 1850 (the date is important because lemons were used) had spent two years of great hardships, but without scurvy. It took until 1919 to finally resolve the cause of this debacle; in the meantime, however, this incident provoked a general and indiscriminate distrust of antiscorbutics -- especially among Polar explorers. On the Jackson-Harmsworth expedition of 1894-1897, a party carrying no lime juice, but eating large amounts of fresh bear meat, remained in good health. The crew left on the ship, taking their daily lime juice and subsisting on canned and salted meat, came down with scurvy. This led to the theory that scurvy was caused by tainted meat. Thus, in 1912, the Antarctic explorer, Captain Scott, and his companions suffered miserable deaths because their expedition was provisioned on the basis of the tainted meat theory and carried no antiscorbutics.

Because of the lack of accurate knowledge concerning our elusive molecule, many other odd theories on scurvy were proposed, some as late as the 1910s when we should have known better. One theory claimed that scurvy was due to an "acid intoxication" of the blood, another that it was a bacterial autointoxication, and as late as 1916, someone "discovered" its bacterial origin. Probably the crowning nonsense was put forward in 1918, when it was claimed that constipation was the cause of scurvy. After World War I, two German doctors who had been assigned to care for Russian prisoners of war came up with the novel idea that scurvy was transmitted by vermin; apparently the Russians had both.

Aside from these blunders, the nineteenth century saw many great advances in the sciences. The germ theory of disease was established after much initial resistance from the medical dogma of the time. And progress was made in the scientific study of nutrition. A brief historical review of the science of nutrition will provide the reader with some background to better understand the theme of this book.

In the early years of the nineteenth century, experiments were conducted in which animals were fed purified diets of the then known food constituents: fats, carbohydrates, and proteins. But the animals did not thrive. Not only did they not grow well but they also developed an opacity of the cornea of the eye (which we now know is due to a vitamin A deficiency). Later in the century, in 1857 in Africa, Dr. Livingstone noted a similar eye condition in poorly fed natives. Later, about 1865, similar observations were made of slaves on South American sugar plantations. The condition was attributed to some toxic constituent in their monotonous diet rather than the lack of some element. Most of the investigators in the growing science of nutrition concentrated on learning the basic facts about calories and the utilization of fats, carbohydrates, and proteins in the diet. Why a purified diet, adequate in these elements, would not support life, long remained a mystery.

In the latter decades of the nineteenth century and early in the twentieth century, many important observations were made which helped unravel this mystery. The Japanese Takaki showed that the disease beriberi, then afflicting 25 to 40 percent of the Imperial Japanese Fleet, could be prevented by adding meat, vegetables, and condensed milk to the customary diet of rice and fish. He missed the significance of his results because he believed the improvement was due to a higher calorie intake comparable to that of the German and British navies. In 1897, the Dutchman Eijkman, working in Batavia, was able to produce beriberi in chickens by feeding them polished rice (rice from which the husk coating is removed) and was able to cure the birds by giving them extracts of the husk or polishings. But he did not interpret his experiments correctly either. He thought that the polished rice contained a poison and the polishings contained a natural antidote. Four years later another Dutchman correctly interpreted these experiments, suggesting that beriberi in birds and men is caused by the lack of some vital substance in the polished rice that is present in the rice bran.

In 1905 and 1906, Pekelharing in Holland and Hopkins at Cambridge, England, repeated the old experiment of feeding rats and mice on purified diets and again found that they failed to grow and died young. But they went one step further and found that adding small amounts of milk, not exceeding four percent of the diet, allowed the animals to grow and live. Both investigators realized that there was something present in natural foods that is vitally important to good nutrition. The concept of deficiency diseases (the idea that a disease could be caused by something lacking in the diet) was dawning.

Two Norwegian workers, Holst and Frohlich, in 1907, were also investigating beriberi, which was common among the sailors of the Norwegian fishing fleet. They were able to produce the disease in chickens and pigeons but they wanted another experimental animal, a mammal, to work with. They chose the guinea pig, and a fortunate choice it was for man and the science of nutrition. After feeding the guinea pigs the beriberi-inducing diets, they found that the guinea pigs rapidly came down with scurvy instead. This was a startling discovery, because up to this point it was believed that man was the only animal that could contract scurvy. This was also a very valuable and practical contribution because now an experimental animal was available that could be used for all sorts of exact and quantitative studies of scurvy. It also showed that there was something very similar and unique about the physiology of the guinea pig and man. This simple observation was the greatest advance in the study of scurvy since the experiments of Lind in the 1740s. This discovery could have been made twelve years earlier had Theobald Smith, the famous American pathologist, realized the importance of his observation that guinea pigs fed a diet of oats developed a hemorrhagic disease. But he failed to relate the bleeding of his guinea pigs to human scurvy.

There were many brilliant workers in the field of nutrition, but we need only mention one other in the thread of occurrences that led to the present misconceptions regarding our elusive molecule. Casimir Funk, working in the Lister Institute, prepared a highly concentrated rice-bran extract for the treatment of beriberi and designated the curative substance in this extract as a "vitamine." In 1912-1913 he published his then radical theory that beriberi, scurvy, and pellagra and possibly rickets and sprue were all "deficiency diseases," caused by the lack of some important specific trace factor in the diet. Subsequent work divided these factors into three groups: vitamin A, the fat-soluble antiophthalmic factor; vitamin B, the water-soluble antineuritic substance; and vitamin C, the water-soluble antiscorbutic material. It was not known for many years whether each vitamin represented a single substance or many. Vitamin B was later found to consist of a group of chemically diverse compounds, while both vitamin A and vitamin C were single substances. Time added more "letters" to the vitamin alphabet. Eventually the different vitamins were isolated, purified, and their chemical structures determined and finally synthesized: but this took many years.

In the early decades of the twentieth century, ascorbic acid was still unknown. The sum total of our knowledge was about equal to Lind's but great changes were in store.

7

_____________________________________________________________

In 1907, with the discovery that guinea pigs were also susceptible to scurvy, experimental work heretofore impossible could be conducted in the study of the disease. Foods could be assayed to determine the amounts of antiscorbutic substance they contained. The general properties of our elusive molecule could be studied by using various chemical treatments on the antiscorbutic extracts and following them with animal assays to learn why the molecule was so elusive and sensitive.

The bulk of the experiments on scurvy in the early years of the twentieth century was carried out by nutritionists who had contrived the vitamin hypothesis and the concept of deficiency diseases. They had already taken scurvy under their wing as a typical dietary deficiency disease. They had even named its cause and cure, vitamin C, without knowing anything more definite than the fact that it was some vague factor in fresh vegetables and fruits.

While the nutritionists were busy with their rats, mice, guinea pigs, and vitamin theories, another important event took place in medicine. We will mention it briefly here because it happened at about this time, and we will come back to it later to explain its significance to ascorbic acid and scurvy.

In 1908, the great English physician, Sir Archibald Garrod, presented a remarkable series of papers in which he set forth new ideas on inherited metabolic diseases, or as he stated it, "Inborn errors of metabolism." These are diseases due to the inherited lack of certain enzymes. This lack may cause a variety of genetic diseases depending upon which particular enzyme is missing. The fatality of these diseases depends upon the importance of the biochemical process controlled by the missing enzyme. It may vary from relatively benign to rapidly fatal conditions. This was a revolutionary concept for those days -- a disease caused by a biochemical defect in one's inheritance.

Now getting back to the main thread of our story, the nutritionists continued their work with their new-found experimental animals and in the next decades uncovered more information about our elusive molecule.

To study the chemistry of a substance such as ascorbic acid, it is necessary to concentrate it from the natural extracts, to isolate it in pure form, to crystallize it, and to recrystallize it to make sure it is just one single chemical compound. Only then can it be identified chemically, and its molecular structure determined. Once the structure of the compound is known, it is relatively easy to find ways to synthesize it. Comparison of the synthetic material with the original crystals will either confirm or deny whether the chemist's tests and reasoning were correct.

In the early 1920s scientists began concentrating the vitamin C factor and studying its behavior under various chemical treatments by means of quantitative animal assays. It was a long, drawn-out procedure, but it was gradually becoming clear why the molecule vanished so easily. The real tests, however, had to await its isolation and crystallization in pure form.

As scientists approached the home stretch of the search their pace quickened and attracted more workers. There was a group at the Lister Institute in England, another in the United States, and a Russian-directed group in France; later, other groups formed. History has a way of playing tricks on the course of events. In the years before our elusive molecule was finally pinned down, there were a few close calls in the attempts to isolate it in pure form which, for one reason or another, were never successful. In the early 1920s a student worker at the University of Wisconsin, studying the biochemistry of oats, isolated a crude crystalline fraction which may have been our elusive material. The work was carried no further because the dean refused a research grant of a few hundred dollars which was required to pay for animal assays of these crude crystals. In 1925, two U.S. Army workers at Edgewood Arsenal were on the verge of obtaining crystals of the antiscorbutic substance when they were transferred to different stations; their work was never completed. Bezssonov, the Russian worker in France, may have isolated antiscorbutic crystals from cabbage juice in 1925, but for some unknown reason these crystals were never thoroughly investigated.